Cutting Your Teeth on FMOD Part 5: Real-time streaming of programmatically-generated audio

This article was inspired by David Gouveia – thanks for the suggestion!

In this article, we shall look at how to generate sounds programmatically using a function which generates each sample one by one, and how to feed these samples into the audio buffer of a playing stream in real-time.

Note: The SimpleFMOD library contains all of the source code and pre-compiled executables for the examples in this series.

What are sound samples?

Figure 1. How a sine wave is represented by sound samples (the red dots) (source: streaming.stanford.edu)

Audio is stored on computers as a series of discrete numbers which are known as samples. Each sample represents nothing more than the volume (sometimes known as the amplitude) of the sound at a specific moment in time.

When you hear a pure tone, for example the dots and dashes of morse code, or a smoke detector which makes a single high-pitch noise when it goes off, you are usually listening to the audio representation of a sine wave. This is generally regarded as the simplest kind of sound. Figure 1 shows how such a sound may be represented by samples. The horizontal axis represents time and the vertical axis represents volume. The black curve shows the sine wave itself, while the red dots show the values of the samples. Hence, you can see how samples got their name: when analog sound is converted to digital, the sound is sampled at fixed time intervals to create an approximation of the original sound.

Notice the difference between the top and bottom graphs: in the bottom graph, the sound wave is sampled twice as often, giving a more accurate digital representation. The number of times a sample is taken per second is called the sample frequency or sample rate, using hertz (Hz) as the unit of measurement. So in the diagrams in Figure 1, the top sine wave has been sampled at 8 Hz and the bottom one at 16 Hz.

In real life, we need far higher sampling rates than this to reproduce audio accurately on a digital device. The standard for CDs (so-called “CD quality sound”) is 44100 Hz, while DVD-Video and most digital video audio use a sample rate of 48000 Hz, ie. 48 thousand samples per second.
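As a rough illustration of sampling, the sketch below takes one second's worth of samples from a sine wave at a chosen sample rate. It is not part of the SimpleFMOD code, and the function name SampleSineOneSecond is made up for this example.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sample one second of a sine wave of toneHz at sampleRateHz.
// Returns one amplitude per sample, each in the range -1.0 to +1.0.
std::vector<double> SampleSineOneSecond(double toneHz, int sampleRateHz)
{
    assert(sampleRateHz > 0);
    const double twoPi = 2.0 * std::acos(-1.0);
    std::vector<double> samples(sampleRateHz);
    for (int n = 0; n < sampleRateHz; n++)
    {
        // The time at which sample n is taken
        double timeSeconds = static_cast<double>(n) / sampleRateHz;
        samples[n] = std::sin(twoPi * toneHz * timeSeconds);
    }
    return samples;
}
```

Calling SampleSineOneSecond(1.0, 8) and SampleSineOneSecond(1.0, 16) gives 8 and 16 samples of the same 1 Hz wave, which corresponds to the two levels of detail described above.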

Figure 2. A square wave

How rapidly the sine wave repeats determines the pitch or frequency of the note. For example, if the sine wave repeats 800 times per second, you get a note playing at 800 Hz. By way of example, the frequency of middle C on a standard piano is around 261.626 Hz.

Sine waves aren’t the only way to produce notes, and different types of repeating pattern produce different types of sound. Figure 2 shows a square wave (repeated 3 times). If you listen to a square wave at 261.626 Hz, you will still be listening to middle C, but the note will sound more harsh to the human ear.

We’ll look at the maths of creating some basic wave types below.

Sound formats

A sound format refers to the way the samples are stored in a piece of audio: the range of numbers which can be used for the volume (and consequently, how many bytes each sample requires), the order the samples are arranged in, and so on.

The volume range allowed is extremely important to accurately reproducing the desired audio (when I talk about volume range, I mean the number of steps in volume available between silent and maximum volume). Clearly, if you only have a choice of 30 volumes, sampling is going to be much less accurate than if you have a choice of 1000 (think about the sine wave in Figure 1 again: the vertical position of the red dot samples is constrained by how many different volume levels can be selected by the sampler; the more volume levels available, the closer each dot can be to the sine wave, and the more accurate the digital representation becomes).

8-bit samples use a single byte (of 8 bits) to store each volume. Since a byte can only store numbers from 0-255, this gives us 256 potential volume levels to sample at. 16-bit samples use 2 bytes (of 8 bits each) to store the volume. With two bytes, we can store numbers from 0-65535 for a total of 65536 potential volume levels. This is the format used by CDs, and the format most MP3s are decoded to for playback. Note that some modern amplifiers and home cinemas support 24-bit sound, giving 16.7 million volume levels for the highest fidelity reproduction.
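To get a feel for how the number of volume levels affects accuracy, here is a small sketch that rounds a sample to the nearest level available at a given bit depth. The function name Quantize is hypothetical, and for simplicity it assumes a signed layout (levels from -127 to +127 for 8 bits, -32767 to +32767 for 16 bits) rather than the unsigned 0-255 layout mentioned above.

```cpp
#include <cassert>
#include <cmath>

// Round a sample in the range -1.0 to +1.0 to the nearest level that a signed
// integer format with the given number of bits can store, then convert the
// stored level back to the -1.0 to +1.0 range so the error can be inspected.
double Quantize(double sample, int bits)
{
    assert(bits >= 2 && bits <= 24);
    // Largest positive level: 127 for 8-bit, 32767 for 16-bit
    const double maxLevel = static_cast<double>((1 << (bits - 1)) - 1);
    return std::round(sample * maxLevel) / maxLevel;
}
```

Comparing Quantize(0.3, 8) with Quantize(0.3, 16) shows the 16-bit result landing much closer to 0.3, which is the effect described for the red dots in Figure 1.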

FMOD supports all of the sound formats you will need, with two that are of particular interest to us:

- FMOD_SOUND_FORMAT_PCM16 – this is the standard, uncompressed 16-bit sample format as described above, where each sample occupies 2 bytes and is a number from -32768 to +32767 (for a total of 65536 volume levels, where 0 is silent and the positive and negative maximums are maximum volume).

- FMOD_SOUND_FORMAT_PCMFLOAT – this is an uncompressed 32-bit sample format. Each sample occupies 4 bytes, but these 4 bytes contain a single floating point number with a (decimal/fractional) value from -1.0 to +1.0 (where 0 is silent and -1 and +1 are maximum volume), rather than a whole number.

When we create the audio buffer that we’ll fill with samples, we have to specify the format to use so that FMOD knows what type of data we are filling it with. We shall use FMOD_SOUND_FORMAT_PCM16 in the examples here.

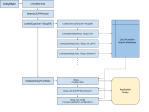

The Process

A picture speaks a thousand words, so take a look at Figure 3 to see how the process of generating samples, pushing them into an audio buffer and playing it back will hang together:

Figure 3. The execution flow of an application which generates audio in real-time and feeds it into the audio buffer being played by FMOD.

We first create an audio buffer, specifying such details as the sample rate, number of channels, sound format (as described above) and the total buffer size. We also specify a function – known as a callback – which FMOD calls when the audio buffer is running low on samples to fill it up with new audio data (new samples).

The callback is where we will actually generate the audio. Naturally, we want to fill the buffer ahead of time before new samples are needed, and FMOD wants us to do this in fixed-size blocks. We therefore need to keep track of how far we are into the generation of our desired audio, since the portion of the buffer we are filling bears no relation to the current playback position.
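The idea of keeping a running position between fixed-size fills can be sketched without any FMOD code at all. In this hypothetical BlockGenerator, each "sample" is just its own index, so you can see that the second block continues exactly where the first one stopped, regardless of when it is requested.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a generator that is asked to fill fixed-size blocks
struct BlockGenerator
{
    // How many samples have been generated so far; persists between calls
    long long samplesElapsed = 0;

    // Fill one block; each value is simply the running sample index
    void Fill(std::vector<long long> &block)
    {
        assert(!block.empty());
        for (std::size_t i = 0; i < block.size(); i++)
            block[i] = samplesElapsed++;
    }
};
```

Filling two 4-sample blocks in a row yields 0-3 and then 4-7. A real callback would use the counter to work out where in the waveform it is, rather than storing the counter itself.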

FMOD keeps playing the audio even as the callback to fill the buffer is executing, and the callback itself is not tied to or called at any point directly by your application – only by FMOD itself – hence the need for 3 threads. Fortunately, you don’t have to worry about the multi-threading code as this is all handled for you automatically by FMOD.

The simplest possible example

Initialize FMOD

First, initialize FMOD as described in Cutting Your Teeth on FMOD Part 1: Build environment, initialization and playing sounds.

Describe the audio buffer

To create an audio buffer, we use the FMOD_CREATESOUNDEXINFO struct which can be passed into FMOD::System::createSound() or FMOD::System::createStream():

// The sample rate, number of channels and total time in seconds before the generated sample repeats

int sampleRate = 44100;

int channels = 2;

int lengthInSeconds = 5;

// Set up the sound

FMOD_CREATESOUNDEXINFO soundInfo;

memset(&soundInfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));

soundInfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO);

// The number of samples to fill per call to the PCM read callback (here we go for 1 second's worth)

soundInfo.decodebuffersize = sampleRate;

// The length of the entire sample in bytes, calculated as:

// Sample rate * number of channels * bytes per sample per channel * number of seconds

soundInfo.length = sampleRate * channels * sizeof(signed short) * lengthInSeconds;

// Number of channels and sample rate

soundInfo.numchannels = channels;

soundInfo.defaultfrequency = sampleRate;

// The sound format (here we use 16-bit signed PCM)

soundInfo.format = FMOD_SOUND_FORMAT_PCM16;

// Callback for generating new samples

soundInfo.pcmreadcallback = PCMRead;

// Callback for when the user seeks in the playback

soundInfo.pcmsetposcallback = PCMSetPosition;

As you can see, the length of the audio buffer is defined by length (in bytes), and the number of samples to fill per callback is set in decodebuffersize. The callback which will do the sound generation is specified in pcmreadcallback. The sound format is set in format – here we’ve gone for 16-bit PCM data as described above.
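The arithmetic behind the length field is worth checking by hand. The helper below is only a sketch (PcmBufferBytes is a made-up name, not an FMOD function) that multiplies the four factors together.

```cpp
#include <cassert>
#include <cstddef>

// Total size in bytes of an uncompressed PCM buffer:
// sample rate * channels * bytes per sample * seconds
std::size_t PcmBufferBytes(int sampleRate, int channels, std::size_t bytesPerSample, int seconds)
{
    assert(sampleRate > 0 && channels > 0 && seconds >= 0);
    return static_cast<std::size_t>(sampleRate) * channels * bytesPerSample * seconds;
}
```

For the settings used here (44100 Hz, 2 channels, 2 bytes per sample, 5 seconds) this comes to 882000 bytes.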

Create the audio buffer

Before you create the audio buffer you may wish to increase FMOD’s stream buffer size from the default of 16384 bytes (16K). Optionally:

fmod->setStreamBufferSize(65536, FMOD_TIMEUNIT_RAWBYTES); // 64K in this example

To create the audio buffer, you must supply the FMOD_OPENUSER mode flag to indicate this will be user-generated sound, and pass in the audio buffer description created above:

FMOD::Sound *sound;

fmod->createStream(nullptr, FMOD_OPENUSER, &soundInfo, &sound);

Start playback

If you want to check the position or playback state of the sound elsewhere in your application, you’ll also need to get the sound’s channel, which means starting playback paused:

FMOD::Channel *channel;

fmod->playSound(FMOD_CHANNEL_FREE, sound, true, &channel);

If the audio is to loop indefinitely, set the looping mode as appropriate:

channel->setLoopCount(-1);

channel->setMode(FMOD_LOOP_NORMAL);

channel->setPosition(0, FMOD_TIMEUNIT_MS); // this flushes the buffer to ensure the loop mode takes effect

Note you can also set FMOD_LOOP_NORMAL in the creation mode flags when you call createStream() if you prefer.

Finally, unpause the audio:

channel->setPaused(false);

If you don’t care about looping or retrieving the channel, or you have set the loop mode when you called createStream(), you can forgo all of the above sorcery and just start playback normally:

fmod->playSound(FMOD_CHANNEL_FREE, sound, false, nullptr);

Per-frame update and application work

It’s vital to call FMOD::System::update() frequently as your application runs:

bool quit = false;

while (!quit)

{

fmod->update();

// do the rest of your application's work here

if (GetAsyncKeyState('Q'))

quit = true;

}

This primitive example merely allows playback to continue indefinitely until the user presses Q, causing the while loop to exit.

Clean up

Once you’re done, tidy up your resources:

sound->release();

fmod->release();

Now for the tricky part: generating the actual audio.

Implementing the callback function

We’ll now use what we learned in our examination of samples at the beginning to write a function which generates a sine wave of a specified frequency and volume, at a sample rate which matches that of our audio buffer.

We start by defining some values:

// Generate new samples

// We must fill "length" bytes in the buffer provided by "data"

FMOD_RESULT F_CALLBACK PCMRead(FMOD_SOUND *sound, void *data, unsigned int length)

{

// Sample rate

static int const sampleRate = 44100;

// Frequency to generate (Hz)

static int const frequency = 800;

// Volume level (0.0 - 1.0)

static float const volume = 0.3f;

// How many samples we have generated so far

static int samplesElapsed = 0;

We’re going to generate a sine wave playing a note at 800 Hz, with a maximum volume of about one-third (pure sine waves sound remarkably loud), at a sample rate matching that of our buffer. We need the sample rate because we have to know how many samples we should generate for a single repetition of the sine wave. If the buffer uses a sample rate of 44100 Hz and we want a sine wave at 441 Hz, for example, then it is fairly obvious that we will need to generate precisely 100 samples per repetition of the sine wave, giving us 441 repetitions per 44100 samples, ie. 441 repetitions per second, ie. a sine wave frequency of 441 Hz.
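The relationship between sample rate, frequency and samples per repetition is a single division. The helper name SamplesPerCycle below is an assumption made for this sketch.

```cpp
#include <cassert>

// Number of samples that make up one repetition of a wave of frequencyHz
// when the buffer is played back at sampleRate samples per second
double SamplesPerCycle(int sampleRate, double frequencyHz)
{
    assert(sampleRate > 0 && frequencyHz > 0.0);
    return sampleRate / frequencyHz;
}
```

SamplesPerCycle(44100, 441) is exactly 100, matching the example above, whereas our 800 Hz tone works out at 55.125 samples per repetition. Because that is not a whole number, it is easier to derive the wave position from a running sample count than to loop over a fixed-length table of samples.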

Notice that we keep a running count of the number of samples we’ve generated in samplesElapsed so that we know how far into the sine wave we are. This value will be incremented every time we generate a new sample.

Next, we cast the void pointer we received from FMOD into a pointer to the correct sound format we are using. Our linear 16-bit PCM sound format stores data as signed shorts (16-bit values ranging from -32768 to +32767), so we cast like this:

// Get buffer in 16-bit format

signed short *buffer = (signed short *)data;

If we were using the 32-bit PCM floating point format, we would cast like this instead:

float *buffer = (float *)data;

Great! Now we’re ready to fill the buffer with data.

Filling the audio buffer

FMOD has indicated to us how many bytes of the buffer should be filled, but we are going to work one sample at a time, so what we really need to know is how many samples to generate. Since we know the sound format is 16-bit (2 bytes per sample), we can simply divide the fill length by 2 to get the number of samples. However, we have also specified that the audio buffer uses 2 channels (for stereo playback; a 1-channel buffer would be mono playback), so we actually need 2 samples per unit of time, one for the left channel and one for the right. Thus, the actual number of bytes used for a single unit of time is 4, and we divide the fill length by 4 to get the number of sample pairs (left and right) to generate.

If you were using 32-bit PCM floating point format, each sample requires 4 bytes, so a 2-channel audio buffer will require 8 bytes per sample pair.
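The byte-to-sample conversion described above can be written as one small function. FramesInBuffer is a hypothetical name; "frame" here means one sample for every channel at a single moment in time, ie. one left/right pair for stereo.

```cpp
#include <cassert>

// How many frames (one sample per channel) fit into lengthBytes bytes
unsigned int FramesInBuffer(unsigned int lengthBytes, unsigned int channels, unsigned int bytesPerSample)
{
    assert(channels > 0 && bytesPerSample > 0);
    return lengthBytes / (channels * bytesPerSample);
}
```

With 176400 bytes to fill, a 2-channel 16-bit buffer holds 44100 sample pairs, while the same number of bytes in the 2-channel 32-bit floating point format holds only 22050.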

Armed with this information, we use a loop to generate as many sine wave samples as needed:

// A 2-channel 16-bit stereo stream uses 4 bytes per sample pair (left and right)

for (unsigned int sample = 0; sample < length / 4; sample++)

{

// Get the position in the sample

double pos = frequency * static_cast<double>(samplesElapsed) / sampleRate;

// The generator function returns a value from -1 to 1 so we multiply this by the

// maximum possible volume of a 16-bit PCM sample (32767) to get the true volume to store

// Generate a sample for the left channel

*buffer++ = (signed short)(sin(pos * M_PI*2) * 32767.0f * volume);

// Generate a sample for the right channel

*buffer++ = (signed short)(sin(pos * M_PI*2) * 32767.0f * volume);

// Increment number of samples generated

samplesElapsed++;

}

Okay, let’s step through this and see what is happening.

First, we figure out our position in the sine wave. Considering samplesElapsed / sampleRate in isolation first, if the frequency of the sine wave was 1 Hz (one repetition per second), this would give us a decimal value from 0 to 1 indicating how far (in percent) we are into it (on the horizontal axis). Multiplying this value by the desired frequency gives us a decimal value from 0 to frequency, which, if we just take the decimal part of it and ignore the whole number part, gives us the percentage we are into the sine wave of the desired frequency (the whole number part indicates how many whole repetitions we have gone through).

We then pass this value to the C++ standard math function sin(), multiplied by PI*2 since sin() works in radians. If you are not familiar with this, simply know that while sines calculated from degrees take an input in degrees from 0-359, sines calculated from radians take an input in radians from 0 – PI*2. The behaviour and result is the same, it is simply the scale of the input value which is different; since we have calculated our position in the sine wave as a value from 0-1, we multiply it by PI*2 to scale it up to the length of a single sine wave (which is 360 degrees, or PI*2 radians). Ultimately, sin() returns a value from -1 to 1 indicating the vertical position on the sine curve for the specified horizontal position (which we specified in radians as the input), where 0 is the centre point and 1 and -1 are the upper and lower peaks of the curve respectively.
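If you want to convince yourself of the position and radians calculations, the following sketch separates them into two tiny functions. The names CyclePosition and SineAt are invented for this example and do not appear in the tutorial code.

```cpp
#include <cassert>
#include <cmath>

// How far we are through the current repetition of the wave, from 0.0 up to
// (but not including) 1.0, after samplesElapsed samples have been generated
double CyclePosition(int frequency, long long samplesElapsed, int sampleRate)
{
    assert(sampleRate > 0);
    double pos = frequency * static_cast<double>(samplesElapsed) / sampleRate;
    // Discard the whole number part (the count of completed repetitions)
    return pos - std::floor(pos);
}

// Height of the sine curve at a position of 0.0 to 1.0 through one repetition
double SineAt(double cyclePos)
{
    const double twoPi = 2.0 * std::acos(-1.0);
    return std::sin(cyclePos * twoPi);
}
```

A quarter of the way through a repetition (position 0.25, or 90 degrees) the curve is at its upper peak of 1, and three quarters of the way through it is at its lower peak of -1. With a 441 Hz wave at 44100 Hz, samples 25 and 125 both sit at position 0.25, one repetition apart.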

To scale the value we have obtained to be constrained within the bounds of our desired maximum volume, all we have to do is multiply the result by volume, since our result is between -1 and 1. For example, with our test volume of 0.3, the maximums will become -0.3 and +0.3, which is what we want.

If you are using the 32-bit PCM floating point sound format, you have at this point a value from -1 to 1 indicating the volume of the sample, and you can simply store it in the buffer. For linear 16-bit PCM data, we need to do one more step, which is to scale the calculated volume (of -1 to 1) by the maximum volume that can be stored in a 16-bit signed value, thereby transforming the range -1 to 1 into a range of -32767 to +32767 instead.

In our example, we store the same value twice in the buffer: once for the left channel and once for the right channel. Notice the ordering: for a given time unit, the left channel’s sample is stored first, then the right channel’s sample.

Finally, we increment the number of samples generated and repeat the process all over again until we have generated the desired amount of samples.
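For comparison, here is how the same loop might look for the 32-bit floating point format. This is a sketch rather than code from SimpleFMOD: FillStereoFloat is a made-up free function that takes the running sample count by reference instead of using a static variable, so it can be tried out without FMOD.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Fill an interleaved stereo buffer of 32-bit float samples with a sine wave.
// lengthBytes is the number of bytes to fill; samplesElapsed is updated so the
// next call continues the wave where this one stopped.
void FillStereoFloat(float *buffer, unsigned int lengthBytes, int frequency,
                     int sampleRate, float volume, long long &samplesElapsed)
{
    assert(buffer != nullptr && sampleRate > 0);
    const double twoPi = 2.0 * std::acos(-1.0);
    // 2 channels * 4 bytes per float = 8 bytes per left/right pair
    const std::size_t pairs = lengthBytes / (2 * sizeof(float));
    for (std::size_t i = 0; i < pairs; i++)
    {
        double pos = frequency * static_cast<double>(samplesElapsed) / sampleRate;
        // No scaling by 32767 is needed: the value is stored as -volume to +volume
        float value = static_cast<float>(std::sin(pos * twoPi) * volume);
        *buffer++ = value; // left channel
        *buffer++ = value; // right channel
        samplesElapsed++;
    }
}
```

The only differences from the 16-bit version are the pointer type, the 8 bytes per sample pair, and the missing multiplication by 32767.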

Your callback function should return FMOD_OK when finishing:

return FMOD_OK;

}

Minimal example source code

The following source code puts everything together into a minimalist example (note: the code uses SimpleFMOD to simplify initialization and audio buffer creation), and uses code from the official demo to show playback information:

#include "../SimpleFMOD/SimpleFMOD.h"

#include <functional>

#include <memory>

#include <iostream>

#include <cmath>

#include <cstdio>

#include <cstring>

using namespace SFMOD;

// Generate new samples

// We must fill "length" bytes in the buffer provided by "data"

FMOD_RESULT F_CALLBACK PCMRead(FMOD_SOUND *sound, void *data, unsigned int length)

{

// Sample rate

static int const sampleRate = 44100;

// Frequency to generate (Hz)

static int const frequency = 800;

// Volume level (0.0 - 1.0)

static float const volume = 0.3f;

// How many samples we have generated so far

static int samplesElapsed = 0;

// Get buffer in 16-bit format

signed short *stereo16BitBuffer = (signed short *)data;

// A 2-channel 16-bit stereo stream uses 4 bytes per sample pair (left and right)

for (unsigned int sample = 0; sample < length / 4; sample++)

{

// Get the position in the sample

double pos = frequency * static_cast<double>(samplesElapsed) / sampleRate;

// The generator function returns a value from -1 to 1 so we multiply this by the

// maximum possible volume of a 16-bit PCM sample (32767) to get the true volume to store

// Generate a sample for the left channel

*stereo16BitBuffer++ = (signed short)(sin(pos * M_PI*2) * 32767.0f * volume);

// Generate a sample for the right channel

*stereo16BitBuffer++ = (signed short)(sin(pos * M_PI*2) * 32767.0f * volume);

// Increment number of samples generated

samplesElapsed++;

}

return FMOD_OK;

}

FMOD_RESULT F_CALLBACK PCMSetPosition(FMOD_SOUND *sound, int subsound, unsigned int position, FMOD_TIMEUNIT postype)

{

// If you need to process the user changing the playback position (seeking), do it here

return FMOD_OK;

}

// Program entry point

int main()

{

// The sample rate, number of channels and total time in seconds before the generated sample repeats

int sampleRate = 44100;

int channels = 2;

int lengthInSeconds = 5;

// Set up FMOD

SimpleFMOD fmod;

// Set up the sound

FMOD_CREATESOUNDEXINFO soundInfo;

memset(&soundInfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));

soundInfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO);

// The number of samples to fill per call to the PCM read callback (here we go for 1 second's worth)

soundInfo.decodebuffersize = sampleRate;

// The length of the entire sample in bytes, calculated as:

// Sample rate * number of channels * bytes per sample per channel * number of seconds

soundInfo.length = sampleRate * channels * sizeof(signed short) * lengthInSeconds;

// Number of channels and sample rate

soundInfo.numchannels = channels;

soundInfo.defaultfrequency = sampleRate;

// The sound format (here we use 16-bit signed PCM)

soundInfo.format = FMOD_SOUND_FORMAT_PCM16;

// Callback for generating new samples

soundInfo.pcmreadcallback = PCMRead;

// Callback for when the user seeks in the playback

soundInfo.pcmsetposcallback = PCMSetPosition;

// Create a user-defined sound with FMOD_OPENUSER

Song sound = fmod.LoadSong(nullptr, nullptr, FMOD_OPENUSER, soundInfo);

// Start playback and get the channel and a reference to the sound

FMOD::Channel *channel = sound.Start();

// Print instructions

std::cout <<

"FMOD Sound Generator Demo - (c) Katy Coe 2013 - www.djkaty.com" << std::endl <<

"==============================================================" << std::endl << std::endl <<

"Press:" << std::endl << std::endl <<

" P - Toggle pause" << std::endl <<

" Q - Quit" << std::endl << std::endl;

bool quit = false;

while (!quit)

{

// Update FMOD

fmod.Update();

// Print statistics

if (channel)

{

unsigned int ms;

unsigned int lenms;

bool paused;

paused = sound.GetPaused();

channel->getPosition(&ms, FMOD_TIMEUNIT_MS);

sound.Get()->getLength(&lenms, FMOD_TIMEUNIT_MS);

printf("Time %02d:%02d:%02d/%02d:%02d:%02d : %s\r", ms / 1000 / 60, ms / 1000 % 60, ms / 10 % 100, lenms / 1000 / 60, lenms / 1000 % 60, lenms / 10 % 100, paused? "Paused " : "Playing");

}

// P - Toggle pause

if (GetAsyncKeyState('P'))

{

sound.TogglePause();

while (GetAsyncKeyState('P'))

;

}

// Q - Quit

if (GetAsyncKeyState('Q'))

quit = true;

}

}

Generating different kinds of sounds

The following functions take the value we calculated in pos above with the whole number part removed (pos - floor(pos)), and return a value from -1 to 1 indicating the amplitude of the sample to create:

// Sine wave

static double Sine(double samplePos)

{

return sin(samplePos * M_PI*2);

}

// Sawtooth

static double Sawtooth(double samplePos)

{

// Rise linearly from -1 to +1 over one repetition

return (2.0 * samplePos) - 1.0;

}

// Square wave

static double Square(double samplePos)

{

return (samplePos < 0.5f? 1.f : -1.f);

}

// White noise ("static")

static double WhiteNoise(double samplePos)

{

// Scale rand() from 0..RAND_MAX to the range -1 to +1

return 2.0 * static_cast<double>(rand()) / RAND_MAX - 1.0;

}

You can simply replace the call to sin() in the callback we created earlier with a call to one of these functions, passing pos - floor(pos) as the argument instead of pos * M_PI*2.
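As a sketch of how such a substitution might look, the function below produces a single 16-bit sample from any wave function passed to it. MakePcm16Sample and the WaveFunction typedef are names invented for this example; SquareWave mirrors the Square() function above.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Any function taking a position of 0.0-1.0 and returning an amplitude of -1.0 to +1.0
typedef double (*WaveFunction)(double);

// Square wave: +1 for the first half of the repetition, -1 for the second half
double SquareWave(double samplePos)
{
    return samplePos < 0.5 ? 1.0 : -1.0;
}

// Produce one 16-bit PCM sample from the given wave function
std::int16_t MakePcm16Sample(WaveFunction wave, int frequency, long long samplesElapsed,
                             int sampleRate, double volume)
{
    assert(wave != nullptr && sampleRate > 0);
    double pos = frequency * static_cast<double>(samplesElapsed) / sampleRate;
    // Keep only the fractional part so the wave function always sees 0.0-1.0
    pos -= std::floor(pos);
    return static_cast<std::int16_t>(wave(pos) * 32767.0 * volume);
}
```

Inside the fill loop you would call this once per time unit and write the result to each channel. Passing a different function pointer changes the timbre without touching the rest of the loop.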

Using a class member as an FMOD callback function

The least troublesome way I found to do this is to set the optional user data you can specify when you populate FMOD_CREATESOUNDEXINFO to a pointer to the object you wish to use, for example:

AudioClassWithCallback ac;

soundInfo.pcmreadcallback = &AudioClassWithCallback::PCMRead;

soundInfo.userdata = &ac;

or if you create the structure from within the object itself:

soundInfo.pcmreadcallback = PCMRead; soundInfo.userdata = this;

Declare the callbacks as static, and at the start of the callback function, add code to query the user data to get the object pointer like this:

FMOD_RESULT AudioClassWithCallback::PCMRead(FMOD_SOUND *sound, void *data, unsigned int length)

{

// Get the object we are using

// Note that FMOD_SOUND * must be cast to FMOD::Sound * to access it

AudioClassWithCallback *me;

((FMOD::Sound *) sound)->getUserData((void **) &me);

// ...

You can now access all the members of the object supplied in userdata via the me pointer.
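The same pattern works with any C-style callback API that lets you attach an opaque pointer. The sketch below shows it in isolation with a mock callback type; ReadCallback, Counter and InvokeCallback are all hypothetical stand-ins and are not part of FMOD.

```cpp
#include <cassert>

// Hypothetical C-style callback signature standing in for the library's own
typedef int (*ReadCallback)(void *userData, int *out);

class Counter
{
public:
    explicit Counter(int start) : value(start) {}

    // A static member function has no 'this' pointer, so it is compatible
    // with a plain function pointer
    static int Read(void *userData, int *out)
    {
        assert(userData != nullptr && out != nullptr);
        // Recover the object from the opaque pointer
        Counter *me = static_cast<Counter *>(userData);
        *out = me->value++;
        return 0;
    }

private:
    int value;
};

// Stand-in for the library: it only knows the function pointer and the opaque pointer
int InvokeCallback(ReadCallback callback, void *userData, int *out)
{
    return callback(userData, out);
}
```

Passing &Counter::Read together with the address of a Counter object lets the static function reach that object's private state, just as the me pointer does in the FMOD callback.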

A more complex example

The source code below shows how to wrap an entire sound generator into a class object. Creating an instance of the object generates an audio buffer of the specified sample rate, number of channels and length in time units, and will generate a sound at the specified frequency and volume using an arbitrary function supplied in the constructor arguments. This function can be changed at any time (although there will be some lag as existing samples in the buffer are played back). When the object goes out of scope, the sound resource is released cleanly. The example allows you to switch between generation of the four sound types outlined above.

#include "../SimpleFMOD/SimpleFMOD.h"

#include <functional>

#include <memory>

#include <iostream>

#include <cmath>

#include <cstdio>

#include <cstring>

using namespace SFMOD;

// Some example sound generators

// These receive a value from 0.0-1.0 indicating the position in the repeating samples to generate

// and return a value from -1.0 to +1.0 indicating the volume at the current sample position

typedef std::function<double (double)> GeneratorType;

class Generators

{

public:

// Sine wave

static double Sine(double samplePos)

{

return sin(samplePos * M_PI*2);

}

// Sawtooth

static double Sawtooth(double samplePos)

{

// Rise linearly from -1 to +1 over one repetition

return (2.0 * samplePos) - 1.0;

}

// Square wave

static double Square(double samplePos)

{

return (samplePos < 0.5f? 1.f : -1.f);

}

// White noise ("static")

static double WhiteNoise(double samplePos)

{

// Scale rand() from 0..RAND_MAX to the range -1 to +1

return 2.0 * static_cast<double>(rand()) / RAND_MAX - 1.0;

}

};

// Class which generates audio according to the specified function, frequency, sample rate and volume

class Generator

{

private:

// The sound

Song sound;

// Sample rate

int const sampleRate;

// Number of channels

int const channels;

// Length of sample in seconds before it repeats

int const lengthInSeconds;

// Frequency to generate

int const frequency;

// Volume (0.0-1.0)

float const volume;

// The function to use to generate samples

GeneratorType generator;

// How many samples we have generated so far

int samplesElapsed;

public:

// Constructor

Generator(SimpleFMOD &fmod, GeneratorType generator, int frequency, int sampleRate, int channels, int lengthInSeconds, float volume)

: generator(generator), frequency(frequency), sampleRate(sampleRate), channels(channels),

lengthInSeconds(lengthInSeconds), volume(volume), samplesElapsed(0)

{

FMOD_CREATESOUNDEXINFO soundInfo;

memset(&soundInfo, 0, sizeof(FMOD_CREATESOUNDEXINFO));

soundInfo.cbsize = sizeof(FMOD_CREATESOUNDEXINFO);

// The number of samples to fill per call to the PCM read callback (here we go for 1 second's worth)

soundInfo.decodebuffersize = sampleRate;

// The length of the entire sample in bytes, calculated as:

// Sample rate * number of channels * bytes per sample per channel * number of seconds

soundInfo.length = sampleRate * channels * sizeof(signed short) * lengthInSeconds;

// Number of channels and sample rate

soundInfo.numchannels = channels;

soundInfo.defaultfrequency = sampleRate;

// The sound format (here we use 16-bit signed PCM)

soundInfo.format = FMOD_SOUND_FORMAT_PCM16;

// Callback for generating new samples

soundInfo.pcmreadcallback = PCMRead;

// Callback for when the user seeks in the playback

soundInfo.pcmsetposcallback = PCMSetPosition;

// Pointer to the object we are using to generate the samples

// (our callbacks query this data to access a concrete instance of the class from our static member callback functions)

soundInfo.userdata = this;

// Create a user-defined sound with FMOD_OPENUSER

sound = fmod.LoadSong(nullptr, nullptr, FMOD_OPENUSER, soundInfo);

}

// Start playing

FMOD::Channel *Start()

{

return sound.Start();

}

// Get reference to sound

Song &Get()

{

return sound;

}

// Change the sound being generated

void SetGenerator(GeneratorType g)

{

generator = g;

}

// FMOD Callbacks

static FMOD_RESULT F_CALLBACK PCMRead(FMOD_SOUND *sound, void *data, unsigned int length);

static FMOD_RESULT F_CALLBACK PCMSetPosition(FMOD_SOUND *sound, int subsound, unsigned int position, FMOD_TIMEUNIT postype);

};

// Generate new samples

// We must fill "length" bytes in the buffer provided by "data"

FMOD_RESULT Generator::PCMRead(FMOD_SOUND *sound, void *data, unsigned int length)

{

// Get the object we are using

// Note that FMOD_SOUND * must be cast to FMOD::Sound * to access it

Generator *me;

((FMOD::Sound *) sound)->getUserData((void **) &me);

// Get buffer in 16-bit format

signed short *stereo16BitBuffer = (signed short *)data;

// Each time unit needs one 16-bit (2-byte) sample per channel

for (unsigned int sample = 0; sample < length / (sizeof(signed short) * me->channels); sample++)

{

// Get the position in the sample

double pos = me->frequency * static_cast<double>(me->samplesElapsed) / me->sampleRate;

// Modulo it to a value between 0 and 1

pos = pos - floor(pos);

// The generator function returns a value from -1 to 1 so we multiply this by the

// maximum possible volume of a 16-bit PCM sample (32767) to get the true volume to store

// Generate a sample for the left channel

*stereo16BitBuffer++ = (signed short)(me->generator(pos) * 32767.0f * me->volume);

// Generate a sample for the right channel

if (me->channels == 2)

*stereo16BitBuffer++ = (signed short)(me->generator(pos) * 32767.0f * me->volume);

// Increment number of samples generated

me->samplesElapsed++;

}

return FMOD_OK;

}

FMOD_RESULT Generator::PCMSetPosition(FMOD_SOUND *sound, int subsound, unsigned int position, FMOD_TIMEUNIT postype)

{

// If you need to process the user changing the playback position (seeking), do it here

return FMOD_OK;

}

// Program entry point

int main()

{

// The sample rate, number of channels and total time in seconds before the generated sample repeats

int sampleRate = 44100;

int channels = 2;

int soundLengthSeconds = 5;

// Types of sound generator

GeneratorType generators[] = {

Generators::Sine,

Generators::Sawtooth,

Generators::Square,

Generators::WhiteNoise

};

// Which generator to use

int generatorId = 0;

int numGenerators = 4;

// Frequency to generate (Hz)

int frequency = 800;

// Volume level (0.0 - 1.0)

float volume = 0.3f;

// Set up FMOD

SimpleFMOD fmod;

// Set up sound generator

Generator generator(fmod, generators[generatorId], frequency, sampleRate, channels, soundLengthSeconds, volume);

// Start playback and get the channel and a reference to the sound

FMOD::Channel *channel = generator.Start();

Song *sound = &generator.Get();

// Print instructions

std::cout <<

"FMOD Sound Generator Demo - (c) Katy Coe 2013 - www.djkaty.com" << std::endl <<

"==============================================================" << std::endl << std::endl <<

"Press:" << std::endl << std::endl <<

" G - Change sound generator" << std::endl <<

" P - Toggle pause" << std::endl <<

" Q - Quit" << std::endl << std::endl;

bool quit = false;

while (!quit)

{

// Update FMOD

fmod.Update();

// Print statistics

if (channel)

{

unsigned int ms;

unsigned int lenms;

bool paused;

paused = sound->GetPaused();

channel->getPosition(&ms, FMOD_TIMEUNIT_MS);

sound->Get()->getLength(&lenms, FMOD_TIMEUNIT_MS);

printf("Time %02d:%02d:%02d/%02d:%02d:%02d : %s\r", ms / 1000 / 60, ms / 1000 % 60, ms / 10 % 100, lenms / 1000 / 60, lenms / 1000 % 60, lenms / 10 % 100, paused? "Paused " : "Playing");

}

// G - Change generator

if (GetAsyncKeyState('G'))

{

sound->Stop();

generatorId = (generatorId + 1) % numGenerators;

generator.SetGenerator(generators[generatorId]);

sound->Start();

while (GetAsyncKeyState('G'))

;

}

// P - Toggle pause

if (GetAsyncKeyState('P'))

{

sound->TogglePause();

while (GetAsyncKeyState('P'))

;

}

// Q - Quit

if (GetAsyncKeyState('Q'))

quit = true;

}

}

I hope you found this tutorial useful! In Part 6 we’ll look at how to capture and record real-time sound card output, and optionally perform frequency analysis on it. Please leave feedback below.

Very useful tutorial, thanks! I’m working on a procedural music generator and your tutorial made the first step quick and easy. 🙂

Hi, Thanks for sharing this tutorial, but now I need your help. I want to save pcm stream file from mp3 audio file. like to convert mp3 to pcm file using fmod. can you please help me out? How can I achieve this?

Thanks

Kabir

Hi! Thanks for sharing your details. I am working on a similar setup as yours, for audio synthesis of collision sounds. I wonder if you happen to know of any way to detect when the buffer runs out, caused by a sudden spike in the sound generation execution time, for example. I want to measure this as part of measuring the performance of my sound synthesis.

I have been looking at Sound::getOpenState, but this always reports non-starving and a Playing state, and might simply not work for user generated sounds. Do you have any ideas?

Once again, thanks for providing all this information, especially the part where you show how to use a member function as callback, which I had no idea about previously.

I seem to be having some difficulty getting the buffer to be the size I want.

I’ve been trying to make the size of the stream buffer the exact number of samples in 4 beats of a certain tempo. Essentially, the buffer should be the size of 4 beats (1 bar).

I’m confident I’ve got all the math figured out. I’m struggling, however, to find extensive documentation on the following EXINFO members:

decodebuffersize

length

As well as the function:

setStreamBufferSize()

Modifying any of these doesn’t seem to change anything. Regardless of what I do, the datalen parameter in my PCMRead callback defaults to 16384.

length is the absolute size in bytes of the sound.

decodebuffersize controls the callback buffer size, in PCM samples.

Don’t forget to create the sound with FMOD_OPENUSER!
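As a rough sketch of the arithmetic being discussed, the following computes how many samples one bar occupies and how many bytes that is. It assumes, as stated in the reply above, that decodebuffersize is given in PCM samples and length in bytes. The helper names samplesForBeats and bytesForSamples are made up for illustration and are not part of FMOD, and the snippet says nothing about whether FMOD will honour an arbitrary buffer size.

```cpp
#include <cassert>
#include <cmath>

// Number of PCM samples (per channel) covered by `beats` beats at `bpm`,
// rounded to the nearest whole sample.
unsigned int samplesForBeats(unsigned int sampleRate, double bpm, unsigned int beats)
{
    assert(sampleRate > 0 && bpm > 0.0);
    const double secondsPerBeat = 60.0 / bpm;
    return static_cast<unsigned int>(std::lround(sampleRate * secondsPerBeat * beats));
}

// Size in bytes of that many samples of interleaved integer PCM.
unsigned int bytesForSamples(unsigned int samples, unsigned int channels, unsigned int bitsPerSample)
{
    assert(bitsPerSample % 8 == 0);
    return samples * channels * (bitsPerSample / 8);
}
```

For a 4-beat bar at 120 bpm and 44100 Hz, samplesForBeats(44100, 120.0, 4) would be the candidate value for decodebuffersize, and bytesForSamples converts a sample count into the byte units that length uses.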

Thanks again for both your advice which has been extremely helpful.

I’ve been working on a very simple synth that just uses FMOD to produce various pitched sine waves depending on the MIDI key pressed.

Here’s what I found which is related to the artifacts/tearing/wave-position stuff you were discussing above:

I have a class that sets up and controls my FMOD stream that calls the callback function that I’ve also called PCMRead.

The class has “phaseposition”, “volume” and “midinotenumber” members that I can set any time and that can be read by the PCMRead callback function (by virtue of passing a reference of the object in using the FMOD userdata functionality (as explained by Katy above – thanks)).

The PCMRead function always looks to the FMOD object’s “phaseposition” member for its phase position so it doesn’t ‘forget’ where it was in the wave between calls. I’m hoping this will avoid any clicking associated with jumping to different positions in the wave.

The main program just creates an object of this class and waits for MIDI notes.

When I get a MIDI note on I write the MIDI note number to the object’s “midinotenumber” member.

In the PCMRead callback function I have the usual loop that generates the samples. Within this loop, on each pass, I re-calculate the phase increment using the latest “midinotenumber” (which I convert to a frequency) read from the object.

This obviously means that the frequency can change mid loop but since I recalculate the phase increment and add it to the running “phaseposition” member I think it avoids the clicks associated with jumping to a new point in the wave.

(Please let me know if I’ve missed something here).
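The comment does not say how the MIDI note number is converted to a frequency. A common choice, assumed here, is twelve-tone equal temperament with note 69 (A4) tuned to 440 Hz. The function names below are hypothetical; the increment uses the 0-1 position convention from the article rather than radians.

```cpp
#include <cassert>
#include <cmath>

// Equal-temperament conversion, assuming MIDI note 69 = A4 = 440 Hz.
double midiNoteToFrequency(int note)
{
    assert(note >= 0 && note <= 127);
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}

// Fraction of one wave cycle to advance per output sample (0-1 range).
double phaseIncrement(double frequency, double sampleRate)
{
    assert(sampleRate > 0.0);
    return frequency / sampleRate;
}
```

Recomputing phaseIncrement(midiNoteToFrequency(note), sampleRate) on every pass of the loop and adding it to the running phase position is the scheme the comment describes.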

On top of all this I use the “volume” member within the PCMRead function to cut and restore the volume for notes on and off.

The trouble is it still clicks at the start and end of notes.

I tried making the MIDI note irrelevant and the volume just toggles between 0.5 and 1 when a note is pressed.

So effectively the only thing changing is the value of the volume I use when calculating each sample within the PCMRead loop:

But clicking is still present.

I tried switching to FMOD’s channel->setPaused(true) and channel->setPaused(false) for note on and off, but that still causes clicking when pausing or resuming a continuous tone at the same volume.

Have either of you found FMOD to be ‘clicky’?

The frequency-tracking technique you describe here is essentially the same algorithm (but with a different implementation) to my first comment at the top of this thread in response to David, so as far as I know you’re not missing anything there 🙂

As David mentioned above, you can eliminate the clicks when a sound starts or stops by fading it in and out very quickly, in the order of a couple of ms or less (experiment to find a good value). There is also the possibility that your sound card driver is the problem; however, if you are making software for other people, you have to assume many of them will have buggy sound drivers as well and compensate for it. Try using a quick fade when you start and stop a note and see if that helps.
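One way to sketch such a quick fade is to never jump the gain directly, but to move it towards the target by a small fixed step per sample. The GainRamp struct and stepForFadeTime helper below are illustrative names, not FMOD functionality, and the sketch assumes the samples are held in a float buffer before being converted to the output format.

```cpp
#include <cassert>
#include <cstddef>

// Per-sample step so that a full 0 -> 1 fade takes roughly `fadeMs` milliseconds.
float stepForFadeTime(float fadeMs, float sampleRate)
{
    assert(fadeMs > 0.0f && sampleRate > 0.0f);
    return 1000.0f / (fadeMs * sampleRate);
}

// Scales a buffer while moving the gain towards `target` by at most
// `step` per sample. `gain` persists between calls so the ramp can
// continue across callback boundaries.
struct GainRamp {
    float gain;  // current gain
    float step;  // maximum gain change per sample

    void process(float *samples, std::size_t count, float target)
    {
        assert(step > 0.0f);
        for (std::size_t i = 0; i < count; ++i) {
            if (gain < target)
                gain = (target - gain < step) ? target : gain + step;
            else if (gain > target)
                gain = (gain - target < step) ? target : gain - step;
            samples[i] *= gain;
        }
    }
};
```

On note-on you would call process with a target of the note volume, and on note-off with a target of 0, letting the ramp finish before the voice is actually silenced.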

I’ll try the fade.

I actually managed to reduce the clicking a fair amount by detecting when the volume changes and using an if statement to only apply the change in volume when the actual sample value is near zero.

This has helped a lot. However, the clicking is still noticeable at higher pitches, which I am assuming is due to the higher frequency leading to more abrupt changes.

I’m sure I’ll be able to use fade to remove these last bits.

Also the sound card I am using is a basic on board system so is likely to not be the best quality as well.

Anyway, thanks for all the help.

Ben

Higher frequency does as you said mean the waveform swings faster. No problem on the help, thanks for the update 🙂

This is all great info, thanks. I’ve been trying to produce a simple synth and I’ve run into the problem David mentioned above (I think) about the buffer retaining data.

I’m using MIDI and when I play from one note to the next the previous note sounds for the duration of (what sounds like) decodebuffersize before switching to the new correct pitch. I want to try clearing the buffer but I can’t see how to do it. I need some reference to the buffer to use in a memset but don’t know what to use. Do either of you have any ideas?

If you’re doing it from inside the callback based on the code above you can do something like:

memset(data, 0, length);

I looked through the API documentation and couldn’t find an obvious way to access the decode buffer outside the callback, so I’m not sure how to clear it at an arbitrary moment in time. David? 🙂

I also do not know a way, but I don’t think it’s really necessary!

What I do is keep a collection of sound sources, which are all types of objects that know how to write to a buffer (mixing their values with what’s already there). For example, each voice in a synthesizer could be considered a sound source.

Then inside the callback I start by clearing the buffer using a memset, and then iterate over every sound source, telling them to write to the buffer in turn.

Alternatively you could keep a local buffer, do all operations on the local buffer, then the callback would simply copy from the local buffer to the decode buffer.

Using this approach, I would even recommend using a floating point local buffer, because it makes calculations and mixing easier without having to deal with overflows. Then at the end, convert the samples to the correct format on the callback.
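A minimal sketch of that idea, assuming a mono float mix buffer and 16-bit signed output: each source is added into the float buffer, and only at the end is the result clamped and converted. The function names are hypothetical, and a real callback would also have to deal with interleaved channels.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Adds one source's samples into the float mix buffer.
void mixInto(std::vector<float> &mix, const std::vector<float> &source, float gain)
{
    assert(source.size() <= mix.size());
    for (std::size_t i = 0; i < source.size(); ++i)
        mix[i] += source[i] * gain;
}

// Clamps each mixed sample to [-1, 1] and converts it to 16-bit signed PCM.
// `out` must have room for mix.size() samples.
void convertToInt16(const std::vector<float> &mix, std::int16_t *out)
{
    for (std::size_t i = 0; i < mix.size(); ++i) {
        const float s = std::max(-1.0f, std::min(1.0f, mix[i]));
        out[i] = static_cast<std::int16_t>(s * 32767.0f);
    }
}
```

Because the sum is held in floats, two loud sources can temporarily exceed full scale without wrapping around; the clamp at the end turns that into clipping rather than integer overflow.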

Thanks both for your very helpful advice. I’m just thinking it all through now and working out a new way of doing this.

If I find anything interesting I’ll let you know.

Thanks again for your help.

Hello again Katy!

I was playing around with some low-level audio programming today, and ran into two gotchas that I thought I should share.

1) The buffer passed to the PCM read callback retains its data between calls, so if you are writing a sound to the buffer, and then want it to stop playing, it is not enough to stop writing to the buffer. You actually need to clear the buffer (with a memset for example) to zero.

2) The value of the decodebuffersize attribute has some big implications on the latency, or how interactive the application is.

I was using a size of 44100 arbitrarily and there was always a large delay behind every operation I tried to perform on the audio (such as starting or stopping a sound, or changing volume).

When I realized the problem, I changed the value to 4410, for a latency of 100ms, which is a lot better than 1 second.

When I use audio recording software with ASIO drivers, I usually set the buffer size to 256, which corresponds to a latency of just under 6 ms at 44100 Hz. I tried that in FMOD, but it was far too low for the sound to play without gaps. At a size of 1024, though, it already sounded okay.
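The relationship between buffer size and latency used in these figures is simply buffer length divided by sample rate. A small sketch, with hypothetical helper names and assuming the buffer size is counted in samples:

```cpp
#include <cassert>

// Time it takes to play `bufferSamples` samples, in milliseconds.
double bufferLatencyMs(unsigned int bufferSamples, unsigned int sampleRate)
{
    assert(sampleRate > 0);
    return 1000.0 * bufferSamples / sampleRate;
}

// Inverse: buffer size in samples for a desired latency, rounded to nearest.
unsigned int samplesForLatencyMs(double latencyMs, unsigned int sampleRate)
{
    assert(latencyMs >= 0.0);
    return static_cast<unsigned int>(latencyMs * sampleRate / 1000.0 + 0.5);
}
```

The second function is the kind of conversion you would need if you let users pick a latency in milliseconds rather than a raw buffer size.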

The above is all good advice for anyone reading this.

Regarding (1), does clearing the buffer to zero cause a click when playback stops?

Regarding (2), it really depends on the speed of the user’s computer. I was aware of the latency when I brutally copy/pasted your code; I think for things like video games you don’t want the user tinkering with such values so it is better to err on the side of caution and just set the value fairly high where the latency is not critical. If you are writing the next FL Studio, Ableton Live or a wave editor, you are of course going to want the lowest latency possible. For those types of applications I would suggest allowing the user to set the latency in a dialog box (preferably converted to milliseconds or some other time unit rather than in raw bytes), so they can tweak it to the power of their own machine.

It might, although I do not recall noticing a click specifically. But I think that virtually any sound that gets cut off abruptly will sound a bit harsh. The same thing can be said about suddenly starting a sound.

The solution is naturally to fade out (or into) the sound before stopping (or starting). When synthesizing sounds, this portion is usually handled by an envelope, with the attack and release portions of the envelope taking care of the fading for us.

Even a quick fade (e.g. a couple of milliseconds long) can help to prevent sound artifacts without any impact on the nature of the sound.

Great article, well done 🙂

I’d like you to try something with this sample. Try to smoothly change the frequency value while the sound is playing. Does the pitch change smoothly, or do you hear sound artifacts? At first sight I think it might not work correctly.

This is a problem I ran into when I wrote my series on synthesizers and tried to implement pitch bending in it. Apparently sampling the sine wave as follows (which is what I got after looking up the basic sine wave formula on Wikipedia without further research):

int n = 0;
for(int i = 0; i < samplesCount; ++i) {
    float value = sin(frequency * n / samplingRate * TwoPi);
    n += 1;
}

produces gaps in the sound wave when you try to change frequency. I recently read about this very problem in The Audio Programming Book, and apparently the correct way to calculate this (and other waves) is:

float phase = 0.0f;
for(int i = 0; i < samplesCount; ++i) {
    float value = sin(phase);
    phase += frequency / samplingRate * TwoPi;
}

A subtle difference, which I'm still not sure I fully understand, but it did solve the problem. For a more efficient and robust implementation the book also suggests that you:

– Cache TwoPi / samplingRate, as that won't change.

– Store frequency / samplingRate * TwoPi in an increment variable and only update it when frequency changes.

– Optionally wrap phase to the 0-TwoPi range. The reasons given for this are that some waveforms actually require this wrapping, and that some compilers' implementations of sin degrade as phase becomes too large.
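Putting those three suggestions together, a phase-accumulating oscillator might look like the sketch below. This is an illustration of the technique under stated assumptions, not the book's code: the class and member names are invented, the state is kept in doubles, and the wrap assumes the frequency stays below the sample rate so that a single subtraction is enough.

```cpp
#include <cassert>
#include <cmath>

const double kTwoPi = 6.283185307179586;

class SineOscillator {
public:
    explicit SineOscillator(double sampleRate)
        : twoPiOverRate_(kTwoPi / sampleRate), phase_(0.0), increment_(0.0)
    {
        assert(sampleRate > 0.0);
    }

    // Only recompute the increment when the frequency actually changes.
    void setFrequency(double hz) { increment_ = hz * twoPiOverRate_; }

    // Returns the next sample and advances the phase, which is kept
    // between calls so a frequency change never moves the wave position.
    double next()
    {
        const double value = std::sin(phase_);
        phase_ += increment_;
        if (phase_ >= kTwoPi)   // wrap; assumes increment_ < kTwoPi
            phase_ -= kTwoPi;
        return value;
    }

    double phase() const { return phase_; }

private:
    double twoPiOverRate_;  // cached TwoPi / samplingRate
    double phase_;          // current position in radians, 0 to TwoPi
    double increment_;      // radians to advance per sample
};
```

Since the oscillator object owns the phase, it can live outside the callback and be called from it for as many samples as each buffer fill needs.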

I see the problem. I’m not quite sure what you mean by gaps, but just for clarity to anyone else reading: the gaps David refers to aren’t temporal gaps of silence, but a clicking/tearing sound caused by jumping from one point in a sine wave to another.

David: as you probably figured out, if you are for example 1/4th of the way into generating samples for a sine wave, then say, double the frequency, but stay at the same relative position along time (in radians), the next sample you generate will be half-way through the first sine wave (of the doubled frequency), effectively taking you from the peak of the original sine wave (max volume) to the centre point (silence) of the new one. That’s what causes the clicks.

I don’t like either of the pieces of code above. Both assume that the callback is always starting to fill from the beginning of a sine wave, which is only the case if you set decodebuffersize to a value that is a precise multiple of the sample length of the sine wave of the frequency you want. The second snippet is worse than the first; the difference between them is that the second one introduces a cumulative floating point error over the duration of the fill. If the fill is long enough, this will incorrectly increase the frequency of the wave. If phase is declared static, the error will accumulate over the lifetime of the audio (I actually experienced this problem when I was making the sample code in the article, so I changed it to use the first method – if you used the second method and just left the audio running for a few minutes, it would very slowly pitch bend up).

The correct solution to this (in my opinion only 🙂 ), which I’ve tested by modifying the sample code, is to store your previous relative position in the sine wave, and when you change the frequency, reset the sample-generated count to zero (no offset from the beginning of the new sine wave), and then add the previous relative position on when calculating the actual current position:

FMOD_RESULT Generator::PCMRead(FMOD_SOUND *sound, void *data, unsigned int length){
    static double prevPos = 0.f;
    ...
    prevPos = me->frequency * static_cast<float>(me->samplesElapsed) / me->sampleRate + prevPos;
    prevPos -= floor(prevPos);
    me->frequency += 10;
    me->samplesElapsed = 0;

    // A 2-channel 16-bit stereo stream uses 4 bytes per sample
    for (unsigned int sample = 0; sample < length / 4; sample++)
    {
        double pos = me->frequency * static_cast<float>(me->samplesElapsed) / me->sampleRate + prevPos;

        // Modulo it to a value between 0 and 1
        pos = pos - floor(pos);
        ...
        *stereo16BitBuffer++ = (signed short)(me->generator(pos) * 32767.0f * me->volume);
        ...
        // Increment number of samples generated
        me->samplesElapsed++;
    }

Obviously this is not the right place to increment the frequency, I just include that above for illustration purposes. My code is using a range of 0-1 to indicate the position in the wave rather than 0-2PI, but otherwise the principle is the same. This gives a steady glitch-free increasing pitch.

Regarding your other points:

– If 2PI and samplerate are defined const as in the minimal example in the article, an optimizing compiler should calculate the expression at compile-time and the ‘caching’ is done for you (it does of course, depend on the compiler, and the order you specify the expression variables; I’m not a compiler expert, but I’m sure it doesn’t hurt to explicitly calculate it in code and put it in a const).

– The second example in the article does the phase wrapping you speak of, except it wraps from 0-1 and scales by 2PI for the sine wave. The sawtooth and square wave functions in the article expect values from 0-1, they both require this wrapping to work properly.

Hope that makes sense:)

Ok just an additional comment on why the 2nd piece of code may have worked for you (it didn’t for me): if your application uses the 2nd method and changes frequency in another thread while the sample fill loop is executing, the 2nd method basically has the same effect as the solution I showed in my previous comment. In the first method, when the frequency is changed, you are multiplying your position in the sine wave by the current frequency (which will induce a sudden shift when the frequency is changed); in the second method, the frequency is a built-in component of the addition to phase: when frequency changes, phase stays the same, which is what you want, and is how my solution also works, but is not how the 1st method works.
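The difference between the two methods at the moment the frequency changes can be shown with a few lines. This sketch uses the article's 0-1 position convention; the two function names are hypothetical labels for "method 1" and "method 2" as described in this thread.

```cpp
#include <cassert>
#include <cmath>

// Method 1: position recomputed from the running sample counter, so a
// new frequency rescales the whole elapsed time.
double positionFromCounter(double frequency, unsigned long n, double sampleRate)
{
    assert(sampleRate > 0.0);
    const double pos = frequency * static_cast<double>(n) / sampleRate;
    return pos - std::floor(pos);
}

// Method 2: position advanced by one increment per sample, so a new
// frequency only changes the size of the next step.
double advancePosition(double position, double frequency, double sampleRate)
{
    assert(sampleRate > 0.0);
    position += frequency / sampleRate;
    return position - std::floor(position);
}
```

Take a 441 Hz wave at 44100 Hz, which is a quarter of the way through its cycle (position 0.25) after 25 samples. If the frequency then doubles to 882 Hz, method 1 puts sample 26 at 882 * 26 / 44100 = 0.52, a jump of more than a quarter cycle, while method 2 moves from 0.25 to 0.27, a single normal step at the new frequency.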

Hello Katy,

Oops, forgot to check the option to get notifications by e-mail.

I don’t have much time to look into this matter at the moment, but I’d like to add some clarification. You wrote:

“Both assume that the callback is always starting to fill from the beginning of a sine wave, which is only the case if you set decodebuffersize to a value that is a precise multiple of the sample length of the sine wave of the frequency you want.”

My bad! What I actually intended in my example was for “n” and “phase” to live as part of the state of the oscillator, and to keep accumulating every time the callback was called (with proper wrapping to prevent overflows), not to be reset every time.

Was the only problem you had with the second example the floating-point errors accumulating over time? Would the use of a double instead of a float solve the problem? I’ll have to look further into this when I have the time, but the second solution is the one I’ve seen described in the bibliography by two different authors, and seemed to work fine for me when I implemented it one year ago.

I might have messed something up when writing my comment though. Will run some tests soon 🙂

By the way, that’s a very nice explanation! Thanks.

Sorry for the spam, too bad there’s no edit button in the comments 😛

Just wanted to say that I’ve looked again at the book, and noticed that it really does use doubles for storing the phase and the increment. Only the output buffer is using floats. I might have overlooked that because there was no mention in the text, but it seems like it might be an important implementation detail in order to prevent the issues you found.

I’m a bit occupied with other stuff right now too, but yes, the only problem with the 2nd example is the floating point error. You can fundamentally never eliminate the problem completely, as a binary number always has a limit on accuracy (it has been a complete pain in the ass in the platform game collision detection code too); however, a double is 64-bit and a float is 32-bit if I remember right, so yes, the problem should be orders of magnitude smaller at worst. Do let me know if you get a chance to try it 🙂 (I tried to find an option in WordPress after reading your comment to let users edit their comments, but it doesn’t seem to be possible with their web-based solution, only when you use your own server, sadly)
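For anyone who wants to try it, here is a rough way to measure the effect without any audio at all: accumulate the same phase increment in a float and in a double, and compare each against the value obtained from a single multiplication. The function name is made up, and the sketch deliberately leaves the phase unwrapped, which is the worst case; wrapping the phase keeps the numbers small and should reduce the error considerably.

```cpp
#include <cassert>
#include <cmath>

// Adds the per-sample phase increment `samples` times using type T and
// returns how far the result is from increment * samples (in radians).
template <typename T>
double accumulatedPhaseError(double frequency, double sampleRate, unsigned long samples)
{
    assert(sampleRate > 0.0);
    const double increment = 6.283185307179586 * frequency / sampleRate;
    const T step = static_cast<T>(increment);
    T phase = 0;
    for (unsigned long i = 0; i < samples; ++i)
        phase += step;  // unwrapped on purpose, so rounding error builds up
    const double exact = increment * static_cast<double>(samples);
    return std::fabs(static_cast<double>(phase) - exact);
}
```

Comparing accumulatedPhaseError<float>(440.0, 44100.0, 441000) with accumulatedPhaseError<double>(440.0, 44100.0, 441000) gives the drift after ten seconds of a 440 Hz tone for each type.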